This week the New York Times published a fascinating look at the latest iteration of Tesla’s automated driving technology, which the company calls “Full Self Driving.” Reporters and videographers spent a day riding with Tesla owner Chuck Cook, an airline pilot who lives in Jacksonville, Florida and has been granted early access to the new technology as a beta tester. What they found was, to my eye anyway, disturbing.

This week the New York Times published a fascinating look at the latest iteration of Tesla’s automated driving technology, which the company calls “Full Self Driving.” Reporters and videographers spent a day riding with Tesla owner Chuck Cook, an airline pilot who lives in Jacksonville, Florida and has been granted early access to the new technology as a beta tester. What they found was, to my eye anyway, disturbing.

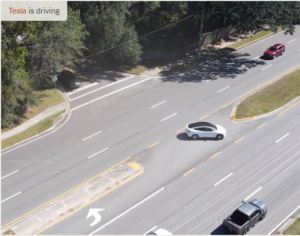

Mr. Cook’s Tesla navigated a broad range of city streets, selecting a route to a destination, recognizing and reacting to other cars, seeing and understanding traffic lights, and even making unprotected left-hand turns—a routine situation that autonomous vehicles struggle to handle. But the car also behaved erratically at times, requiring Mr. Cook to take over and correct its course. In one instance it veered off the street and into a motel parking lot, almost hitting a parked car. In another, it tried to make a left turn onto a quiet street but then, fooled by shade and branches from an overhanging tree, aborted the turn and ended up heading into oncoming traffic on a divided street. These incidents occurred in a single day of testing.

It is worth considering the experience of the Times reporters in the broader context of autonomous vehicle development, something the article largely fails to do. Teslas operating in “Autopilot” (the earlier version of the company’s autonomous technology, which is only supposed to be used on divided, limited-access highways) have already killed roughly a dozen people in the United States. Tesla is currently under investigation by the National Highway Traffic Safety Administration (NHTSA), and the Department of Justice has reportedly opened a criminal investigation. The California Department of Motor Vehicles has filed a complaint against Tesla that could result in the revocation of its license to sell cars in the state.

Meanwhile, the victims of several of the fatal crashes involving Autopilot have filed suit against Tesla. None of these suits has yet been resolved. In many of the cases, the victims were drivers who plainly ignored Tesla’s repeated instructions to monitor the road at all times, so Tesla may have strong comparative fault arguments to make at trial. On the other hand, a number of the victims were not themselves driving Teslas.

To experts, it is entirely unsurprising that drivers find themselves unable to pay attention while their Teslas whisk them around. Studies have found that humans perform poorly at tasks requiring “passive vigilance.” The National Transportation Safety Board (NTSB), in its own investigations of several fatal collisions involving Teslas, has been flagging this problem for years, and has noted that Tesla’s method of ensuring driver attentiveness, which relies on detecting hands on the wheel, is plainly inadequate.

Full Self Driving may be a charmingly madcap taste of the future when the car is being driven by an airline pilot like Chuck Cook. But most of us are much worse at paying attention with nothing to do. Indeed, several Tesla owners have already paid for these failures with their lives. With the driver attentiveness system unchanged in Full Self Driving, it seems clear that more accidents, and probably more liability, lie ahead.

Not that the makers shouldn’t strive to improve the systems, but…

“Teslas operating in ‘Autopilot’. . . have already killed roughly a dozen people in the United States.”

How does that compare to human drivers over the same amount of miles driven?