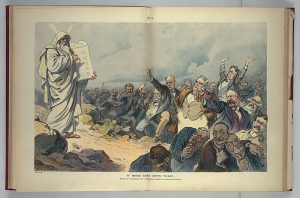

A Bible for AI: The Need for Ethics in AI and Emerging Technologies

Recently, I attended the SCCE's Compliance & Ethics Institute in Las Vegas. One of the keynote speakers was Amber Mac, a well-known public speaker on business innovation, the Internet of Things, online safety, artificial intelligence (AI), and other topics. Her keynote address that morning was titled “Artificial Intelligence: A Day in Your Life in Compliance & Ethics.”

It was completely mind-blowing.

Her comments led me to a profound realization: ethics will be extremely important for AI and other emerging technologies as society integrates them into our daily lives. That integration is starting to reach, or has already reached, our homes and workplaces. “Alexa” might already be part of your family. This development is growing at an exponential rate, and there’s no slowing it down. In fact, Waymo (the self-driving subsidiary of Google parent Alphabet) is launching the first-ever commercial driverless car service next month. Yet have we stopped to consider whether an ethical “backbone” should be put in place to guide AI and all emerging technologies?

For example, a few years ago Microsoft released an AI chatbot on Twitter named Tay, which was designed to learn from the conversations it had. The goal was for the AI to get progressively “smarter” as it chatted with regular people over the Internet. However, the project was an embarrassment. In no time, Tay blurted out racist slurs, defended white supremacists, and even advocated for genocide. So, how did this happen? The problem was that Tay’s learning was not supported by proper ethical guidance. Without a grounding in things like the difference between truth and falsehood, or general knowledge that racism exists, it was vulnerable to learning unethical thought and behavior.